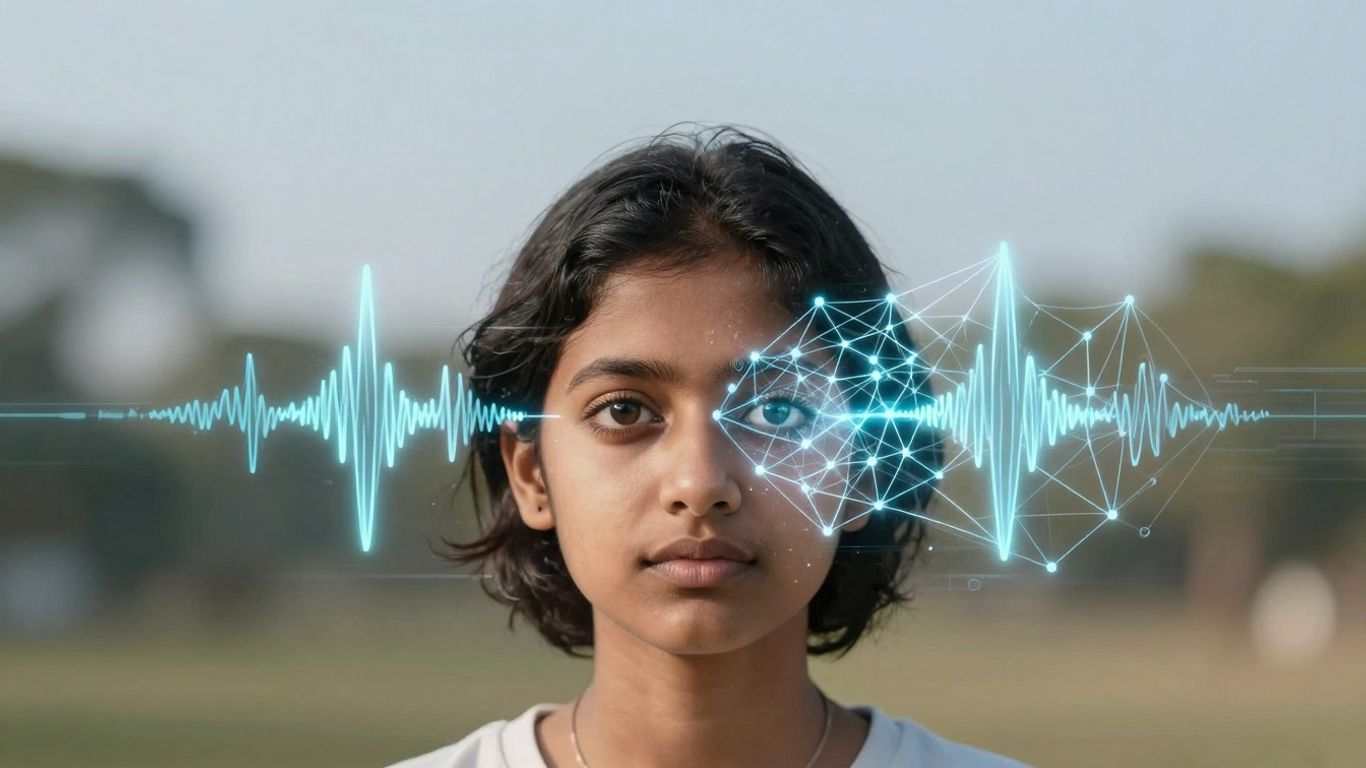

Voice, Vision, and AI: Charting the Future Frontiers of Search

It feels like just yesterday we were typing everything into search engines. Now things are changing fast: we talk to our devices, and they are starting to 'see' what we see. This article, 'Voice, Vision, and AI: Charting the Future Frontiers of Search,' looks at how these new ways of interacting with technology are shaping what comes next. It's not just about finding information; it's about how we find it and what we can do with it.

Key Takeaways

- AI is making search more natural, moving from simple commands to actual conversations.

- Voice and visual input are becoming just as important as typing for finding things online.

- AI is helping people with disabilities in new ways, making technology more usable for everyone.

- We're seeing AI create art and tell stories with data, opening up new creative paths.

- The next big step for AI in search might be understanding language and images so well it's like talking to another person.

The Evolving Landscape Of Conversational AI

From Rule-Based Systems To Advanced Understanding

Remember when talking to a computer felt like trying to have a conversation with a very confused robot? That was the era of rule-based systems. These early conversational agents, or chatbots, operated on a strict set of pre-programmed rules. If you said X, they responded with Y. It worked for very simple tasks, like answering basic FAQs, but anything outside that narrow scope would quickly break the illusion. It was like trying to follow a script that only had a few lines.
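
To make "if you said X, they responded with Y" concrete, here is a minimal sketch of a keyword-rule chatbot in Python. The rules, replies, and function name are hypothetical illustrations, not taken from any real product.

```python
import re

# Hypothetical keyword rules: if the message contains X, reply with Y.
RULES = {
    "hours": "We are open 9am to 5pm, Monday to Friday.",
    "refund": "Refunds are processed within 5 business days.",
    "shipping": "Standard shipping takes 3 to 7 days.",
}
FALLBACK = "Sorry, I don't understand. Could you rephrase?"


def rule_based_reply(message: str) -> str:
    """Return the canned reply for the first rule keyword found in the message."""
    words = re.findall(r"[a-z']+", message.lower())
    for keyword, reply in RULES.items():
        if keyword in words:
            return reply
    # Anything outside the scripted keywords falls through to the fallback.
    return FALLBACK


if __name__ == "__main__":
    print(rule_based_reply("What are your hours?"))  # matches the "hours" rule
    print(rule_based_reply("When do you open?"))     # same meaning, no keyword: fallback
```

The second question asks the same thing as the first, but because no rule keyword appears, the bot gives up. That brittleness is exactly what broke the illusion.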

Then came the shift towards more sophisticated methods. Instead of just following rules, these systems started to learn from data. This meant they could handle a wider range of questions and even understand variations in how people phrased things. Think of it as moving from a rigid script to a more flexible outline. This progress was a big deal, allowing for more natural interactions and opening doors for applications beyond simple customer service.
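
One simple way to picture "learning from data" is nearest-neighbor matching: store several example phrasings per intent and pick the intent whose example is most similar to the new message. The sketch below uses bag-of-words cosine similarity. The examples, intent names, and the 0.3 threshold are assumptions for illustration; production systems use trained statistical or neural models rather than this toy.

```python
import math
import re
from collections import Counter
from typing import Optional

# Hypothetical labeled examples: several phrasings per intent.
EXAMPLES = [
    ("what are your hours", "hours"),
    ("when do you open", "hours"),
    ("what time do you close", "hours"),
    ("i want my money back", "refund"),
    ("how do i get a refund", "refund"),
    ("how long does delivery take", "shipping"),
    ("when will my order arrive", "shipping"),
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(message: str, threshold: float = 0.3) -> Optional[str]:
    """Return the intent of the most similar example, or None if nothing is close enough."""
    query = tokenize(message)
    best_score, best_intent = 0.0, None
    for text, intent in EXAMPLES:
        score = cosine(query, tokenize(text))
        if score > best_score:
            best_score, best_intent = score, intent
    return best_intent if best_score >= threshold else None
```

Unlike the rule-based bot, `classify("When do you open on Saturday?")` lands on the "hours" intent even though the word "hours" never appears, because it closely resembles one of the stored phrasings.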

The journey from rigid, predictable responses to systems that can actually grasp the nuances of human language has been a long one, marked by significant leaps in how we teach machines to communicate.

The Rise Of Voice-Based Interaction

For a long time, interacting with computers meant typing. But then, something changed. Suddenly, we could just talk to our devices. This was a game-changer, especially with the advent of smartphones and smart speakers. Voice assistants like Siri, Alexa, and Google Assistant became commonplace. They made technology more accessible, allowing people to multitask or interact without needing to look at a screen or type.

This shift wasn't just about convenience; it was about making technology feel more human. Speaking is our primary mode of communication, so integrating voice into our digital interactions felt natural. It opened up new possibilities for how we get information, control our homes, and even manage our daily schedules. It’s pretty wild to think how quickly we went from typing commands to just asking questions out loud.

Here’s a quick look at how voice interaction has grown:

- Early Days (Pre-2010s): Limited voice recognition, mostly for dictation or very basic commands.

- The Smartphone Era (2010s): Voice assistants become integrated into phones, improving accuracy and usability.

- Smart Speaker Boom (Mid-2010s onwards): Dedicated devices for voice interaction enter homes, driving widespread adoption.

- Current Trends: Voice is becoming a primary interface for many tasks, with continuous improvements in understanding and context.

The Impact Of Large Language Models

If you’ve heard of ChatGPT, you’ve already encountered the power of Large Language Models (LLMs). These are AI systems trained on massive amounts of text data, allowing them to generate human-like text, translate languages, write different kinds of creative content, and answer your questions in an informative way. They represent a huge leap forward in how AI understands and uses language.

LLMs have changed the game for conversational agents. They can handle complex queries, maintain context over longer conversations, and even adapt their tone and style. This means chatbots and voice assistants are no longer just simple tools; they're becoming more like capable partners in conversation. The ability of these models to process and generate language at such a scale is truly remarkable, and it’s still early days for what they can do.
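
"Maintaining context" largely means sending the recent conversation back to the model with each new message. A minimal sketch, assuming a fixed budget counted in words (real systems count tokens with the model's own tokenizer), trims the oldest turns first. The role/content message shape resembles what many chat APIs accept, but no specific API is called here, and the `Conversation` class is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Conversation:
    """Keeps as many recent turns as fit a rough word budget (a stand-in for a token limit)."""

    system_prompt: str
    max_words: int = 50
    turns: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one turn, e.g. role='user' or role='assistant'."""
        self.turns.append({"role": role, "content": content})

    def context(self) -> List[Dict[str, str]]:
        """Return the system prompt plus the newest turns that fit the budget."""
        budget = self.max_words - len(self.system_prompt.split())
        kept: List[Dict[str, str]] = []
        # Walk backwards so the most recent turns are kept first.
        for turn in reversed(self.turns):
            cost = len(turn["content"].split())
            if cost > budget:
                break  # older turns no longer fit and are dropped
            kept.append(turn)
            budget -= cost
        kept.reverse()  # restore chronological order
        return [{"role": "system", "content": self.system_prompt}] + kept
```

With a small budget, a turn where the user gave their name can fall out of the window, and the model can no longer answer "What is my name?". That is one reason larger context windows matter for longer conversations.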

It's fascinating to see how LLMs are impacting various fields:

- Content Creation: Generating articles, scripts, and marketing copy.

- Customer Support: Providing more nuanced and helpful automated responses.

- Education: Acting as tutors or research assistants.

- Software Development: Assisting with code generation and debugging.

The development of LLMs marks a significant turning point, moving conversational AI from a novelty to a genuinely powerful tool.

AI's Transformative Role In Accessibility

Empowering Mobility and Autonomy

Artificial intelligence is really changing the game for people with disabilities, especially when it comes to getting around and doing things for themselves. Think about self-driving cars: the AI behind them could give people with vision impairments or other mobility challenges more freedom to travel independently. It's not just about cars, though. Devices are appearing that use machine learning to read facial expressions, letting users control things like wheelchairs just by moving their face. This kind of tech gives people more control over their lives, which is a pretty big deal. It's about breaking down barriers so everyone can participate more fully in daily life, and we're seeing AI create more agency and independence for many people.

Enhancing Communication and Speech

Communication is another area where AI is making a huge difference. People with progressive illnesses that affect speech, such as ALS, can now preserve their voice: they record samples of their own speech, and AI builds a personalized synthetic voice that sounds like them and can read typed text aloud. That's pretty amazing. There are also apps that use machine learning to understand speech that is harder to interpret, such as non-standard pronunciation or speech affected by conditions like Parkinson's or cerebral palsy, and then turn it into clear audio or text. More people can express themselves and connect with others easily. This technology is really about making sure everyone's voice can be heard.

Supporting Learning and Cognition

When it comes to learning and managing daily tasks, AI is proving to be a real helper. For people who find it hard to stay organized or manage their time, AI can break down big projects into smaller, manageable steps. It can also create personalized schedules and set reminders, which really helps with executive functioning. Large language models, in particular, have a lot of potential for students. They can create summaries of information that are not only accurate but also interactive. This is a big plus for anyone who struggles with attention or focus, making learning more accessible and less overwhelming. AI can act as a personal assistant, helping to reduce cognitive load and make information easier to process. It's about creating a more supportive learning environment for everyone, adapting to individual needs and making information more digestible.
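
The scheduling half of this is straightforward to sketch. Assuming the project has already been broken into steps with time estimates (in the scenario above an AI model would propose them; here they are typed in by hand), a hypothetical `build_schedule` function can lay them out with short breaks and set a reminder a few minutes before each one.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple


def build_schedule(
    steps: List[Tuple[str, int]],
    start: datetime,
    break_minutes: int = 10,
    remind_before: int = 5,
) -> List[Dict[str, object]]:
    """Lay out (description, estimated_minutes) steps back to back with breaks.

    Each entry gets a reminder time `remind_before` minutes before it starts.
    """
    schedule: List[Dict[str, object]] = []
    current = start
    for description, minutes in steps:
        end = current + timedelta(minutes=minutes)
        schedule.append({
            "task": description,
            "start": current,
            "end": end,
            "reminder": current - timedelta(minutes=remind_before),
        })
        # Leave a short break before the next step.
        current = end + timedelta(minutes=break_minutes)
    return schedule


if __name__ == "__main__":
    steps = [("Outline essay", 20), ("Write introduction", 30), ("Proofread", 15)]
    for item in build_schedule(steps, datetime(2024, 5, 1, 9, 0)):
        print(f"{item['start']:%H:%M}-{item['end']:%H:%M}  {item['task']}"
              f"  (reminder {item['reminder']:%H:%M})")
```

The fixed break length and reminder lead time are arbitrary defaults; a personalized assistant would presumably adjust them to the individual user.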

AI is not just about creating new tools; it's about rethinking how we design and interact with technology to be more inclusive from the start. The goal is to build systems that work for everyone, regardless of their abilities, by focusing on human-centered design principles. This means considering the broader impact and ensuring that advancements benefit society as a whole, not just a select few. It's a shift towards creating a more equitable digital world.

Here's a look at some of the ways AI is helping:

- Mobility: Autonomous vehicle AI, smart wheelchairs controlled by facial expressions.

- Communication: Personalized voice synthesis for speech impairments, speech recognition for non-standard speech patterns.

- Learning: Task breakdown for executive function support, interactive summaries for students with attention challenges.

These advancements show how AI can be a powerful partner in creating a more accessible world, aligning with the broader movement towards humanity-centered design. It's about using technology to give people more control and opportunities.

New Frontiers In Data And Creative Expression

AI is really shaking things up when it comes to how we interact with information and how we make art. It's not just about crunching numbers anymore; it's about making data talk and letting creativity flow in new ways. We're seeing AI become a tool that can help anyone express themselves, regardless of their traditional skills.

Interactive Data Storytelling With AI

Imagine looking at a complex chart and being able to just ask it questions. That's what AI is starting to do. Instead of just staring at static graphs, you can have a conversation with your data. AI tools can now read, interpret, and even change information presented visually. This means you can point to a trend and ask, "Why did this spike happen?" or "What's the average for this group?" It's like having a data analyst right there with you, making sense of things in real-time. This kind of interactive data storytelling is a big step forward for understanding complex information.
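
Underneath a "conversation with your data," each question still has to be mapped to a computation. The sketch below assumes a tiny made-up data set and routes questions by keyword (real tools would use a language model for this step): "average" computes a group mean, and "spike" flags values more than 1.5 sample standard deviations above the mean. It can locate a spike, but explaining why it happened would need context that the numbers alone don't contain. All names are hypothetical.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional

# Hypothetical monthly sign-ups per region (made-up numbers).
DATA: Dict[str, List[int]] = {
    "north": [120, 130, 125, 310, 128],
    "south": [90, 95, 100, 98, 97],
}


def group_average(region: str) -> float:
    """Mean value for one group."""
    return float(mean(DATA[region]))


def find_spikes(region: str, z: float = 1.5) -> List[int]:
    """Indices of values more than z sample standard deviations above the mean."""
    values = DATA[region]
    mu, sigma = mean(values), stdev(values)
    if sigma == 0:
        return []
    return [i for i, v in enumerate(values) if (v - mu) / sigma > z]


def answer(question: str) -> Optional[str]:
    """Route a plain-language question to a computation using keyword matching."""
    q = question.lower()
    region = next((r for r in DATA if r in q), None)
    if region is None:
        return None
    if "average" in q:
        return f"The average for {region} is {group_average(region):.1f}."
    if "spike" in q:
        return f"Spikes in {region} at month indices: {find_spikes(region)}."
    return None
```

Asking "What's the average for south?" or "Where is the spike in north?" returns a computed sentence; a question about a region that isn't in the data returns `None` rather than a guess.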

Generative AI For Artistic Creation

For a long time, if you couldn't draw or paint, your artistic ideas might have stayed just that – ideas. Generative AI is changing that. People can now use simple voice commands or text prompts to have AI create images, music, or even stories, which opens up artistic expression to a much wider audience. Someone with a vivid imagination but no technical drawing skills can now bring their visions to life. It's a powerful way to democratize creativity and let more people participate in making art. The technology is still evolving, but its potential for personal and professional creative work is huge. You can find more about how generative AI is changing creative work in articles such as one published by Harvard Business Review (HBR).

Expanding Employment Opportunities

AI's impact isn't just on creativity and data; it's also creating new avenues for employment, especially for people with disabilities. Historically, certain jobs might have been out of reach due to physical or cognitive requirements. However, AI-powered tools are breaking down these barriers. For instance, AI in autonomous vehicles can provide greater independence for people with vision impairments. Similarly, AI can assist with tasks that require organization or focus, making a wider range of jobs accessible. This shift is not just about technology; it's about creating a more inclusive workforce where everyone has a chance to contribute and find meaningful work. The goal is to build a future where technology helps bridge gaps, not widen them.

The Future Trajectory Of Conversational Agents

So, where are these chatty computer programs headed? It’s a question on a lot of people’s minds, especially with how fast things are changing. We've moved from clunky, rule-based bots that could barely string a sentence together to systems that can actually hold a decent conversation. The next big leap seems to be towards something closer to what we might call true artificial intelligence.

Towards True Artificial General Intelligence

Right now, most AI is pretty specialized. Your smart speaker is great at playing music, but it can't write a novel. The idea of Artificial General Intelligence (AGI) is about creating AI that can understand, learn, and apply knowledge across a wide range of tasks, much like a human can. Think of it as moving from a highly skilled specialist to a versatile generalist. This is a huge goal, and honestly, we're still a ways off, but the progress is undeniable. Some researchers even point to systems like Google's LaMDA as early steps, though the claims of sentience around it were, let's say, a bit premature.

Self-Learning And Adaptable AI Applications

One of the key ways we'll get closer to AGI is through AI that can learn on its own and adapt in real time. Instead of needing constant updates and retraining by humans, future agents may be able to pick up new information and adjust their behavior based on new experiences. That could make them much more useful in dynamic environments, like helping with complex research or managing intricate systems. Imagine an AI that learns your preferences not just from what you tell it, but from how you interact with it over time, making it a truly personalized assistant. The same idea applies to building a good website: it should be helpful, understand what users need, and perhaps suggest things they might like based on past interactions by personalizing content.
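
Learning "from how you interact with it over time" can be sketched as an online update: after each interaction, nudge a per-category score toward 1 for engagement or toward 0 for a skip, with no separate retraining pass. This uses an exponential moving average, which is one simple choice among many; the class name, categories, and learning rate of 0.3 are assumptions.

```python
from collections import defaultdict
from typing import Dict, List


class PreferenceModel:
    """Online preference learner: nudges a score per category after every interaction.

    An exponential moving average makes recent behavior count more than old behavior.
    """

    def __init__(self, learning_rate: float = 0.3, prior: float = 0.5) -> None:
        self.learning_rate = learning_rate
        # Unseen categories start at a neutral prior score.
        self.scores: Dict[str, float] = defaultdict(lambda: prior)

    def observe(self, category: str, engaged: bool) -> None:
        """Update from one interaction: engaged=True for a click or play, False for a skip."""
        target = 1.0 if engaged else 0.0
        old = self.scores[category]
        self.scores[category] = old + self.learning_rate * (target - old)

    def rank(self, categories: List[str]) -> List[str]:
        """Order candidate categories from most to least preferred."""
        return sorted(categories, key=lambda c: self.scores[c], reverse=True)
```

A higher learning rate adapts faster to changing tastes but also overreacts to a single accidental click; a lower one is steadier but slower to notice real changes.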

Understanding Nuance In Natural Language

Even the smartest AI today can stumble over sarcasm, subtle jokes, or implied meanings. The future of conversational agents hinges on their ability to grasp the nuance of human language. This isn't just about understanding the words themselves, but the intent, the emotion, and the context behind them. Getting this right is incredibly complex. It involves understanding cultural references, idioms, and even the unspoken cues that humans pick up on effortlessly.

Here's a look at what that might involve:

- Contextual Awareness: Remembering past conversations and using that information to inform current interactions.

- Emotional Intelligence: Recognizing and responding appropriately to user emotions.

- Intent Recognition: Figuring out what a user really wants, even if they don't state it directly.
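
A toy version of contextual awareness and intent recognition fits in a few lines: keep a small dialogue state so a follow-up like "Do I need an umbrella tomorrow?" inherits the city from an earlier turn, and pick the intent whose keyword cues best match the message. The keyword lists, city list, and frustration-word check (a very rough stand-in for emotional intelligence) are all hypothetical; real assistants use trained models for each of these.

```python
import re
from typing import Dict, Optional

# Hypothetical keyword cues for each intent.
INTENT_CUES = {
    "weather": {"weather", "rain", "sunny", "forecast", "umbrella"},
    "directions": {"directions", "route", "get", "drive", "walk"},
}
KNOWN_CITIES = {"paris", "london", "tokyo"}
FRUSTRATION_CUES = {"ugh", "annoying", "again", "useless"}


class DialogueState:
    """Tracks the last intent and city so follow-up questions can leave them out."""

    def __init__(self) -> None:
        self.intent: Optional[str] = None
        self.city: Optional[str] = None

    def interpret(self, utterance: str) -> Dict[str, Optional[str]]:
        words = set(re.findall(r"[a-z]+", utterance.lower()))
        # Intent recognition: choose the intent with the most matching cue words.
        scores = {name: len(words & cues) for name, cues in INTENT_CUES.items()}
        best = max(scores, key=scores.get)
        if scores[best] > 0:
            self.intent = best  # otherwise keep the previous intent
        # Contextual awareness: reuse the previous city if none is mentioned.
        mentioned = words & KNOWN_CITIES
        if mentioned:
            self.city = sorted(mentioned)[0]
        # Rough emotion cue: switch to a gentler tone if frustration words appear.
        tone = "apologetic" if words & FRUSTRATION_CUES else "neutral"
        return {"intent": self.intent, "city": self.city, "tone": tone}
```

The weak point is obvious: anything the cue lists don't anticipate is misread, which is why the nuance described above is so hard to get right.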

The journey towards more sophisticated conversational agents is marked by a continuous push to bridge the gap between machine processing and human-like comprehension. This involves not just processing words, but grasping the subtle layers of meaning that define our communication.

This ongoing evolution means that conversational agents will become more than just tools; they'll start to feel more like genuine partners in our daily lives, assisting us in ways we're only beginning to imagine.

Voice And Vision: Pillars Of Future Search

Think about how you find things now. You type words into a search bar, right? It's worked for ages, but it feels a bit… limited. The next big leap in how we get information is going to blend what we say with what we see. It's not just about asking a question anymore; it's about showing and telling.

The Synergy Of Voice And Visual Input

Imagine you're trying to fix a leaky faucet. Instead of typing "how to fix a leaky faucet," you could just point your phone at the sink and say, "Hey, how do I fix this?" The AI would then use its vision capabilities to identify the faucet type and its audio processing to understand your question. It could then show you a video or step-by-step diagrams tailored to that specific problem. This combination of voice commands and visual context is a game-changer. It makes searching for solutions much more direct and intuitive. This fusion of senses is what will make future search feel less like a chore and more like a conversation with a knowledgeable assistant.
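
One way to picture the fusion step: assume speech recognition has already produced a transcript and an image model has already produced labels with confidence scores (both are stubbed here; `VisionResult` and `fuse_query` are hypothetical names). Pointing words such as "this" can then be replaced with the most confident visual label to form a concrete search query. Modern multimodal models combine the two signals far more richly; this only illustrates the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VisionResult:
    label: str         # what an image model thinks is in the frame
    confidence: float  # 0.0 to 1.0


# Words that point at "whatever the camera sees" instead of naming it.
DEICTIC = {"this", "that", "it", "these", "those"}


def fuse_query(transcript: str, vision: List[VisionResult], min_conf: float = 0.6) -> str:
    """Replace pointing words in the spoken query with the most confident visual label."""
    confident = [v for v in vision if v.confidence >= min_conf]
    if not confident:
        return transcript  # fall back to the voice query alone
    best = max(confident, key=lambda v: v.confidence)
    words = []
    for word in transcript.split():
        core = word.strip("?.!,").lower()
        words.append(best.label if core in DEICTIC else word)
    return " ".join(words)
```

For example, "Hey, how do I fix this?" combined with a confident "single-handle kitchen faucet" label becomes "Hey, how do I fix single-handle kitchen faucet", a query specific enough to find the right repair guide. If the camera is unsure, the spoken query is used as-is.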

Personalized Search Experiences

AI is getting really good at remembering what you like and how you search. When voice and vision work together, this personalization gets even better. If you've previously searched for recipes using voice and looked at pictures of healthy meals, the AI might suggest a new recipe based on ingredients it sees in your fridge (via your phone's camera) and your past preferences. It's like having a search engine that truly gets you.

Here's a quick look at how personalization might work:

- Contextual Awareness: The AI understands your current location, time of day, and even your mood.

- Past Behavior Analysis: It learns from your previous searches, voice commands, and visual interactions.

- Predictive Assistance: It anticipates what you might need before you even ask.
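
The signals in this list can be combined into a single ranking score. The sketch below applies the idea to the fridge example; the recipes, tag weights, and the 0.2 mealtime bonus are made-up assumptions, and mood is left out because it is much harder to measure. Predictive assistance would then amount to surfacing the top-ranked item before the user asks.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Recipe:
    name: str
    ingredients: Set[str]
    tags: Set[str]
    meal: str  # "breakfast", "lunch" or "dinner"


def score(recipe: Recipe, fridge: Set[str], tag_prefs: Dict[str, float], meal_now: str) -> float:
    """Add up three hypothetical signals; the weights are arbitrary."""
    # Visual input: share of the recipe's ingredients spotted in the fridge.
    coverage = len(recipe.ingredients & fridge) / len(recipe.ingredients) if recipe.ingredients else 0.0
    # Past behavior: learned weights for tags the user has engaged with before.
    preference = sum(tag_prefs.get(tag, 0.0) for tag in recipe.tags)
    # Situational context: small bonus when the recipe suits the current mealtime.
    timing = 0.2 if recipe.meal == meal_now else 0.0
    return coverage + preference + timing


def recommend(recipes: List[Recipe], fridge: Set[str],
              tag_prefs: Dict[str, float], meal_now: str) -> List[Recipe]:
    """Highest-scoring recipes first."""
    return sorted(recipes, key=lambda r: score(r, fridge, tag_prefs, meal_now), reverse=True)
```

Simply adding the signals keeps the example readable; a real system would learn how much each signal should count rather than hard-coding it.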

AI As A Research And Productivity Partner

Beyond just finding answers, AI is becoming a partner in our work and learning. For students, imagine pointing your phone at a complex diagram in a textbook and asking the AI to explain it in simple terms. Or for professionals, showing the AI a product you're interested in and asking it to find similar items with better reviews or lower prices. It's about using AI to cut through the noise and get to what matters, faster. This makes research and everyday tasks feel less like a slog and more like a collaboration.
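
For the product example, assume the item in view has already been recognized and matched to a catalog entry, and treat "similar" as simply "same category" (a big simplification). Finding alternatives with better reviews or lower prices is then a filter plus a sort; the `Product` fields and the catalog are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Product:
    name: str
    category: str
    price: float
    rating: float  # average review score, 0 to 5


def better_alternatives(target: Product, catalog: List[Product]) -> List[Product]:
    """Items in the same category that beat the target on rating or on price."""
    candidates = [
        p for p in catalog
        if p.name != target.name
        and p.category == target.category
        and (p.rating > target.rating or p.price < target.price)
    ]
    # Best-reviewed first; among equal ratings, cheapest first.
    return sorted(candidates, key=lambda p: (-p.rating, p.price))
```

Sorting on the tuple `(-rating, price)` puts higher ratings first and breaks ties by price, so the most useful suggestion appears at the top.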

The goal isn't just to find information, but to understand it and act on it more effectively. By combining our voice and what our eyes see, AI can bridge the gap between the digital world and our physical surroundings, making information more accessible and actionable than ever before.

The future of search is all about how machines see and understand things. It's like having a very smart assistant that gets what you really mean, not just the words you type, which should make finding what you need online faster and more accurate than before.

Looking Ahead

So, where does all this leave us? It's pretty clear that voice and vision tech, powered by AI, aren't just cool gadgets anymore. They're becoming a bigger part of how we find information and interact with the world. Think about how much easier it is for people with certain disabilities to get around or communicate now, thanks to these tools. We're seeing AI help with everything from driving cars to making art, and it's only going to grow. The next few years will likely bring even more changes, making our digital lives feel more natural and maybe even a bit more human. It’s an exciting time to see what comes next.

Frequently Asked Questions

What is conversational AI?

Conversational AI is like a smart computer program that can chat with you using regular language, kind of like talking to a friend. It's gone from just following simple commands to understanding what you mean, even if you say things in different ways.

How is AI helping people with disabilities?

AI is a game-changer for accessibility! It can help people with physical challenges control wheelchairs with facial expressions, create personalized voices for people who are losing the ability to speak, and help students who struggle with focus by breaking big tasks into smaller, manageable steps.

Can AI create art?

Yes, absolutely! Generative AI can take your ideas and turn them into amazing art, music, or writing. This means people who might not be able to draw or paint can still bring their creative visions to life.

What are 'Large Language Models' (LLMs)?

Think of LLMs as super-smart AI brains that have read a huge amount of text. This lets them understand and generate human-like language, which makes conversations with AI much more natural and helpful, for example when you want a quick summary of a complex topic.

How will AI change the way we search for information?

AI is making search more intuitive. Instead of just typing keywords, you can talk to AI assistants and even use images to find what you're looking for. It's like having a personal assistant that understands both your voice and what you're seeing.

Will AI take over jobs?

While AI will change some jobs, it's also creating new opportunities, especially in fields related to AI development and maintenance. Plus, AI tools can help people with disabilities find and succeed in more jobs than ever before.